Definition

When we compute the time complexity T(n) of an algorithm we rarely get an exact result. That's fine – typically we are only interested in how fast T(n) is growing as a function of the input size n.

For example, if an algorithm increments each number in a list of length n, we might say: "This algorithm runs in O(n) time and performs O(1) work for each element".

#

Formal Definition

Let T(n) and f(n) be two positive functions. We write T(n) ∊ O(f(n)), and say that T(n) has order of f(n), if there are positive constants M and n₀ such that T(n) ≤ M·f(n) for all n ≥ n₀.

In essence: T(n) ∊ O(f(n)) means that T(n) doesn't grow faster than f(n).

Constant Time - O(1)

According to the definition, T(n) ∊ O(1) means that T(n) is smaller than some fixed constant for all large enough values of n. An algorithm with T(n) ∊ O(1) is said to have constant time complexity.

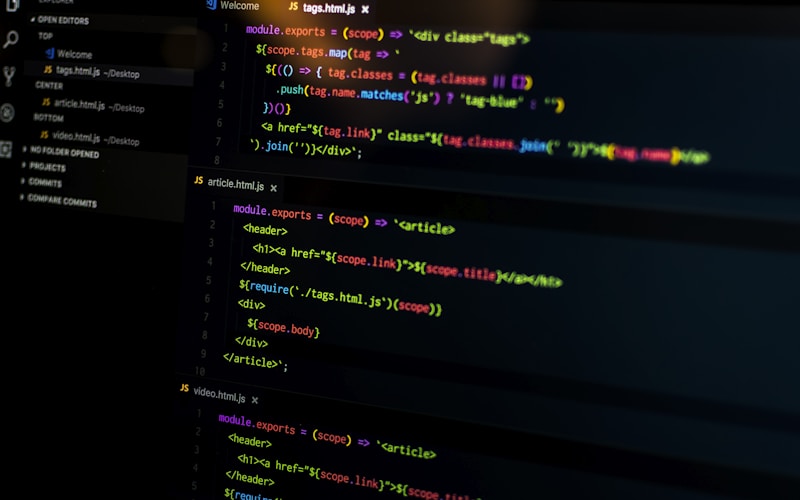

javascript

Copy

1// O(1) - Constant time 2function getFirst(arr) { 3 return arr[0]; 4} 5 6// No matter how big the array, this takes the same time

Linear Time - O(n)

An algorithm with T(n) = n - 1 can be written as T(n) ∊ O(n). An algorithm with T(n) ∊ O(n) is said to have linear time complexity.

javascript

Copy

1// O(n) - Linear time 2function findMax(arr) { 3 let max = arr[0]; 4 for (let i = 1; i < arr.length; i++) { 5 if (arr[i] > max) max = arr[i]; 6 } 7 return max; 8}

Quadratic Time - O(n²)

An algorithm with time complexity T(n) = n²/2 - n/2 becomes T(n) ∊ O(n²), and we say that the algorithm has quadratic time complexity.

javascript

Copy

1// O(n²) - Quadratic time 2function bubbleSort(arr) { 3 for (let i = 0; i < arr.length; i++) { 4 for (let j = 0; j < arr.length - 1; j++) { 5 if (arr[j] > arr[j + 1]) { 6 [arr[j], arr[j + 1]] = [arr[j + 1], arr[j]]; 7 } 8 } 9 }10 return arr;11}

Key Takeaways

| Complexity | Name | Status |

|------------|------|--------|

| O(1) | Constant | ✅ Excellent |

| O(log n) | Logarithmic | ✅ Great |

| O(n) | Linear | ✅ Good |

| O(n log n) | Linearithmic | ✅ Good |

| O(n²) | Quadratic | ⚠️ Fair |

| O(2ⁿ) | Exponential | ❌ Poor |

| O(n!) | Factorial | ❌ Terrible |

O(n log n) is Really Good

The first four complexities indicate an excellent algorithm. An algorithm with worst-case time complexity W(n) ∊ O(n log n) scales very well, since logarithms grow very slowly:

- log₂ 1,000 ≈ 10

- log₂ 1,000,000 ≈ 20

- log₂ 1,000,000,000 ≈ 30

In fact, O(n log n) time complexity is very close to linear – it takes roughly twice the time to solve a problem twice as big.

Ω(n²) is Pretty Bad

Algorithms with time complexity Ω(n²) are useful only for small input: n shouldn't be more than a few thousand.

10,000² = 100,000,000

An algorithm with quadratic time complexity scales poorly – if you increase the input size by a factor 10, the time increases by a factor 100.

Conclusion

Understanding Big O notation is fundamental for any developer. It helps you write efficient code and make informed decisions about algorithm selection.